Speaker: Keli Du

Affiliation:

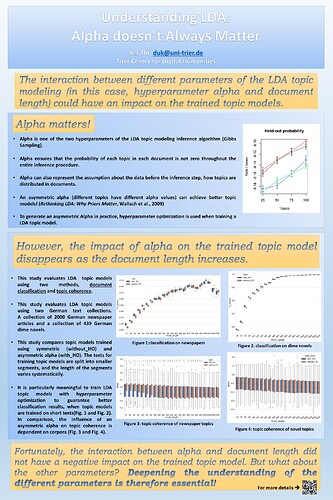

Title: Understanding LDA: Alpha doesn’t Always Matter

Abstract: Latent Dirichlet Allocation (LDA) topic modeling has been widely used in Digital Humanities (DH) in recent years to explore large collections of unstructured text. Topic modeling involves many parameters that can influence the result. The present research evaluates the influence of the hyperparameter alpha on two German text collections from two perspectives: document classification and topic coherence. A previous study (Wallach et al., 2009) showed that an asymmetric alpha lets the model fit the text data better by encouraging some topics to be more dominant than others, and can therefore yield better topic models. This study, however, found that an asymmetric alpha can improve topic modeling results, but only when the documents are short.
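To make the difference between a symmetric and an asymmetric alpha concrete, the following minimal sketch samples document-topic distributions from a Dirichlet prior and averages them over many simulated documents. It is only an illustration of the prior, not the setup used in the study: the alpha vectors, the number of topics, and the helper names `sample_dirichlet` and `mean_topic_shares` are assumptions chosen for this example, and no LDA model is actually trained.

```python
import random


def sample_dirichlet(alpha, rng):
    """Draw one topic distribution theta ~ Dirichlet(alpha) via normalized Gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]


def mean_topic_shares(alpha, n_docs=5000, seed=0):
    """Average the sampled topic shares over n_docs simulated documents."""
    rng = random.Random(seed)
    sums = [0.0] * len(alpha)
    for _ in range(n_docs):
        theta = sample_dirichlet(alpha, rng)
        for k, share in enumerate(theta):
            sums[k] += share
    return [s / n_docs for s in sums]


# Hypothetical priors over four topics; both have the same total mass (2.0).
symmetric_alpha = [0.5, 0.5, 0.5, 0.5]
asymmetric_alpha = [1.4, 0.3, 0.2, 0.1]

symmetric_means = mean_topic_shares(symmetric_alpha)
asymmetric_means = mean_topic_shares(asymmetric_alpha)

print("symmetric :", [round(m, 3) for m in symmetric_means])
print("asymmetric:", [round(m, 3) for m in asymmetric_means])
```

Under the symmetric prior every topic receives roughly the same average share, whereas the asymmetric prior makes the first topic dominant on average. This is the prior-level effect the abstract refers to. In a trained model the prior is combined with the word counts of each document, so its weight relative to the data is larger when a document contains few words.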